k8s ingress with cloudflare tunnels

by Foo

So this is a pretty cool idea - and something that challenges common practices. Normally we deploy a service somewhere, put a loadbalancer in front of it and configure networking to allow ingress only through it (because you did configure your SGs properly, right? right? RIGHT???). But then, especially when you use some cloud edge provider like Cloudflare, you also have that in front of your loadbalancers, and the additional work to secure the network segment between edge and LBs. But what if you didn’t have to?

If you’ve not seen Cloudflare (previously Argo) tunnels before, their idea in the above scenario is simple: you ditch your loadbalancers, deny all network ingress and instead run a component in your environment (cloudflared) that opens an outbound connection to Cloudflare - which then acts as a reverse tunnel to route traffic from the edge to your services. I won’t spend a lot of time trying to sell this to you - Cloudflare sales folks already did the work on their product page - but here are two reasons I’m particularly interested in the approach:

- less stuff to think about: don’t have to manage LBs, SGs, (elastic-or-not) IPs, Ingresses, Services, Edge-to-LB-encryption-in-transit and a bunch of other pieces of plumbing

- architect smaller networking quanta: I can think of something as self-contained as a Pod as a fully enclosed network element, which is great to apply zero trust principles to your apps

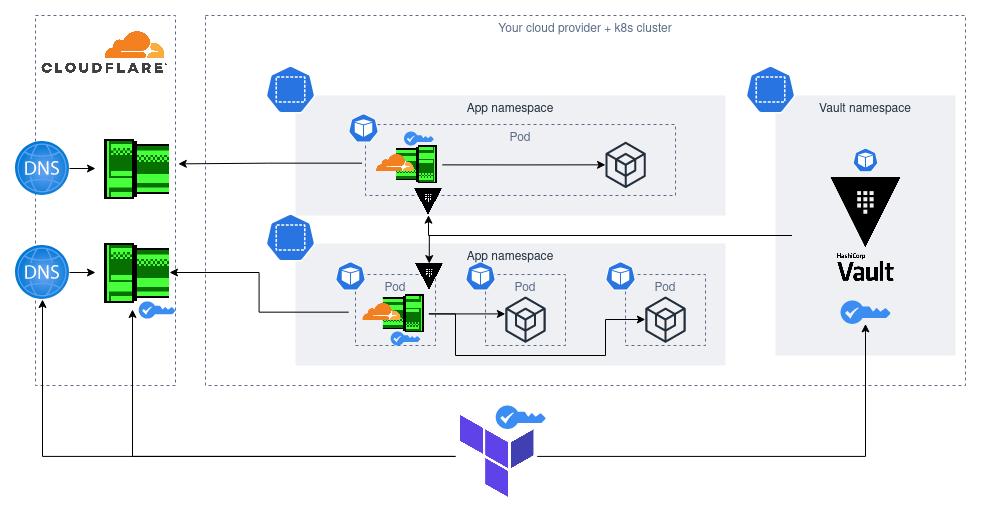

The overall design that we’ll be covering in the rest of the post.

Some practical things to know about tunnels

I won’t go through a step-by-step intro tutorial for this - no point in repeating the docs. But a few things to be aware of:

There are 2 “types” of tunnels

Locally configured and console-configured. I found this counterintuitive: I wanted to have an IaC setup, and since the docs seem to heavily hint at the console-configured tunnels being UI-driven, I thought the locally configured ones were the way to go. It’s instead the opposite.

Locally configured tunnels are meant for manual management via CLI: tinkering, temporary experiments and suchlike. In this mode cloudflared authenticates with both a file containing a tunnel-specific token for connection and a certificate that authenticates Tunnel management operations. This is effectively a tunnel-admin type credential, and for sure you don’t want to have it sticking around deployments.

Console-configured tunnels seem presented as UI-driven, but in reality that includes the API - which is what a Terraform or Pulumi provider would call. In this mode, configuring a tunnel would involve a single tunnel token as a credential, only used to authenticate connection and no management operation.

Anatomy of a tunnel config

Here are the moving pieces of config in a tunnel setup, in dependency order:

Tunnel secret

Must be 32 bytes long before base64 encoding.

This is a client authentication credential: anyone in possession of this secret can start their own instance of cloudflared and “syphon off” some of the traffic that should get to your apps/networks.
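For illustration, here is one way to get a secret of exactly that size in Terraform. It's a minimal sketch assuming the hashicorp/random provider; the resource and local names are made up, and it's an alternative to (not the same as) the random_password approach in the listing further down.

```hcl
# Sketch only: "tunnel_secret_bytes" and "tunnel_secret_b64" are made-up names.
resource "random_id" "tunnel_secret_bytes" {
  byte_length = 32 # 32 raw bytes, i.e. the required length before base64 encoding
}

locals {
  # b64_std is the standard base64 encoding of those 32 bytes,
  # ready to be passed as the tunnel's secret
  tunnel_secret_b64 = random_id.tunnel_secret_bytes.b64_std
}
```

One caveat with this variant: as far as I know random_id attributes aren't flagged as sensitive the way random_password's result is, so the value can show up in plan output. Either way it still ends up in the state.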

Tunnel

Logical declaration of the tunnel in your CF account.

Has a name and a secret. If you go via UI the secret will be generated for you and shown only once; the API expects you to provide it yourself, and by extension so does its Terraform incarnation, cloudflare_tunnel. The tunnel ID is important for further API-based configuration and becomes available after creation.

Tunnel config

Once the tunnel is created, you provide its config as a separate logical entity.

This includes, most importantly, the routing rules, which specify where the client routes incoming traffic. You must have at least a default one that matches anything without a more specific rule, and can add subdomain and/or path-based rules on top. Adding rules from the UI will create the necessary CNAME records for you automagically.

You can also tweak a couple of HTTP options, some L3 settings around keepalives and, most notably, the ability to reject all traffic that hasn’t been authorised via Cloudflare Access.
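To make the rule ordering concrete, here's a minimal sketch of a config with a path-based rule, a hostname rule and the mandatory default. It assumes the v4 cloudflare provider's cloudflare_tunnel_config resource; the variables, hostnames, service names and ports are all placeholders. My understanding is that cloudflared evaluates rules top to bottom and treats path as a regular expression, so double-check the docs before relying on either.

```hcl
# Sketch only: variables, hostnames and services are placeholders.
variable "account_id" {
  type = string
}

variable "tunnel_id" {
  type = string
}

resource "cloudflare_tunnel_config" "example" {
  account_id = var.account_id
  tunnel_id  = var.tunnel_id

  config {
    # Most specific rule first: API calls go to the backend
    ingress_rule {
      hostname = "app.example.com"
      path     = "^/api/"
      service  = "http://backend:8080"
    }

    # Everything else for the same hostname goes to the frontend
    ingress_rule {
      hostname = "app.example.com"
      service  = "http://frontend:80"
    }

    # Default rule: no hostname or path, so it matches whatever is left
    ingress_rule {
      service = "http_status:404"
    }
  }
}
```

Answering unmatched traffic with a 404 is just one choice for the default rule; the listing later in this post points it straight at the app instead.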

DNS record

If you want any external traffic to be routed through your tunnel, you’ll need a Cloudflare proxied CNAME entry for it. The bit of magic that ties things together is in the value: it has to be <tunnel id>.cfargotunnel.com and be set to proxied.

As stated above, creating rules from the CF Zero Trust UI will generate missing DNS records for you. What the UI doesn’t seem to allow is simply having a single name associated with the default routing rule, which you can do when configuring this via API. Adding the record manually from the DNS UI would also work.

CloudflareD

Last but not least - the client. This is simply configured with the straight up token as a CLI arg, or a config file containing it.

When using the client in this mode most other client-side configuration options become unavailable (as they are controlled by the API instead), with the exception of a handful of client-specific options of less interest.

Outbound ports

Where this gets configured depends on where you’re deploying cloudflared. You’re not configuring LBs and ingress rules anymore, but you’ll need a couple of egress rules instead. The necessary outbound connections are detailed in the documentation, and for me they translated into params of a CiliumNetworkPolicy.

A secret journey

The setup above is fairly slick - except for the secret token bit, ofc.

You generate it somehow, pass it to the API for tunnel creation, then need to make it available to cloudflared in whatever your execution environment is.

Of course there’s a high-cardinality amount of ways to encrypt this cat depending on how you’re configuring all of this, whether and which secret storage tech you have at hand and where’s your code running, I’ll just cover my case: Terraform, Vault Cloud (yes, the Hashicorp one) and a k8s cluster.

So for me there are 2 segments: generation (Terraform) -> Vault, then Vault -> App.

Terraform -> Vault

Secret generated with random_password. Put its .result into a vault_kv_secret_v2. Done.

Realise that any secret used as input param of a tf resource gets saved plaintext in the state. Undone.

Honestly, after some research I just decided to suck it up for now in the interest of making the toy work, and hopefully I’ll get to explore alternatives in the future. Cloudflare’s API update tunnel operation supports updating the token - so maybe a Vault plugin that rotates it, rendering the one in statefiles useless, could be an idea?

I’ll just mention for completeness but avoid going in too much detail: of course to store the secret in vault you’ll need to configure a role to authenticate to it and a policy that allows you to write to your chosen kv path. In my case I’m doing Terraform CLI local runs (so cloud state, local client) in a shell where I authenticated to a target-app-specific role via Auth0 with an OIDC browser flow. Pretty neat.
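For reference, the write policy can be as small as the sketch below. It assumes the hashicorp/vault provider's vault_policy resource and a kv v2 engine mounted at secret; the policy name is made up and the path matches the one used later in this post. The capability list is my reading of what the provider needs (create/update to write, read for drift detection, delete on destroy), so treat it as a starting point.

```hcl
# Sketch only: the policy name is a placeholder.
resource "vault_policy" "tunnel_secret_writer" {
  name = "tunnel-secret-writer"

  policy = <<-EOT
    # kv v2 keeps values under <mount>/data/<path>, hence the extra "data" segment
    path "secret/data/prod/my_app/cloudflared" {
      capabilities = ["create", "update", "read", "delete"]
    }
  EOT
}
```

Depending on the options you use you might also need access to the matching secret/metadata/ path. And of course this policy itself has to be created by something with more privileges than the role it's attached to.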

Vault -> Application

My k8s cluster runs Vault agent sidecar injector, which makes it pretty easy.

You just need to add a bunch of overly verbose annotations to your Deployment’s yaml config and secrets get injected for you. Authentication to Vault is handled via ServiceAccounts. Secrets will be rendered in templated files in the Pod’s filesystem.
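The Vault side of that ServiceAccount authentication looks roughly like this. It's a sketch assuming the hashicorp/vault provider and a Kubernetes auth method already mounted at kubernetes; the policy name, the default namespace and the TTL are assumptions, while the role name and ServiceAccount match the Deployment shown later.

```hcl
# Sketch only: policy name, namespace, mount path and TTL are assumptions.
resource "vault_policy" "cloudflared_reader" {
  name = "my-app-cloudflared-reader"

  policy = <<-EOT
    # read-only access to the tunnel token stored in kv v2
    path "secret/data/prod/my_app/cloudflared" {
      capabilities = ["read"]
    }
  EOT
}

resource "vault_kubernetes_auth_backend_role" "my_app" {
  backend                          = "kubernetes" # mount path of the Kubernetes auth method
  role_name                        = "my-app-specific-vault-role"
  bound_service_account_names      = ["my_app"]
  bound_service_account_namespaces = ["default"]
  token_policies                   = [vault_policy.cloudflared_reader.name]
  token_ttl                        = 3600 # seconds
}
```

The role name is what goes into the vault.hashicorp.com/role annotation, and the bound ServiceAccount is the one the Pod runs as.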

Nothing special or complicated here once you have the Vault setup going - just time consuming and annoying to figure out a couple of not-so-well explained bits:

- the way you reference Vault paths for injection is wildly inconsistent across secret engines and cli/injector and not explained at all

- cloudflared’s help command and error messages don’t make it super clear that you can have the token in a config file, and not just via the --token config option (Vault injects only to FS)

Show me the tf

What I got out of this looks, all in a single file, roughly like the following listing. Mine is split in a couple of separate stacks for infra repo design reasons but on this of course you do you.

    terraform {
      required_version = ">= 1.4"

      required_providers {
        cloudflare = {
          source  = "cloudflare/cloudflare"
          version = "~> 4.1"
        }
        random = {
          source  = "hashicorp/random"
          version = "3.4.3"
        }
        vault = {
          source  = "hashicorp/vault"
          version = "3.10.0"
        }
      }

      cloud {
        organization = "your-tfcloud-org"
        workspaces {
          name = "tunnels-or-however-you-want-to-name-this"
        }
      }
    }

    provider "cloudflare" {
    }

    provider "random" {
    }

    provider "vault" {
      # you might have different options depending on your vault setup ofc
      address      = "https://whereyourvaultis.example.com"
      ca_cert_file = "../in-case-you-have-a-self-signed/vault.crt"
    }

    locals {
      account_id = "copy this from the URL in your CF dash. make it an input instead of a local if you plan to reuse the stack across different CF accounts"
    }

    resource "random_password" "tunnel_secret" {
      length = 32
    }

    resource "cloudflare_tunnel" "my_tunnel" {
      account_id = local.account_id
      name       = "my-tunnel"
      secret     = base64encode(random_password.tunnel_secret.result)
    }

    resource "cloudflare_tunnel_config" "my_tunnel" {
      account_id = local.account_id
      tunnel_id  = cloudflare_tunnel.my_tunnel.id

      config {
        ingress_rule {
          # for cloudflared running as an in-pod container, phoenix app listening on its default 4000
          # for an in-namespace cloudflared running in its own pod you can refer to other Services with http://my_service:port
          # you can also go cross-namespace with k8s's internal DNS syntax <service>.<namespace>.svc.cluster.local
          service = "http://localhost:4000"
        }
      }
    }

    resource "vault_kv_secret_v2" "my_tunnel_secret" {
      mount = "secret"
      name  = "prod/my_app/cloudflared"
      data_json = jsonencode(
        {
          tunnel_token = cloudflare_tunnel.my_tunnel.tunnel_token,
          tunnel_id    = cloudflare_tunnel.my_tunnel.id
        }
      )
    }

    # I'm assuming you already configured this elsewhere - use the resource instead if you need to create the zone
    data "cloudflare_zone" "zone" {
      account_id = local.account_id
      name       = "example.com"
    }

    resource "cloudflare_record" "record" {
      zone_id = data.cloudflare_zone.zone.id
      type    = "CNAME"
      name    = "my_app" # use @ for root of zone
      value   = "${cloudflare_tunnel.my_tunnel.id}.cfargotunnel.com"
      proxied = true
    }

Then the pod containing cloudflared needs to look like this for an in-pod deployment:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: my_app # placeholder: real k8s object names can't contain underscores, use e.g. my-app
    spec:
      selector:
        matchLabels:
          app: my_app
      replicas: 1 # I'm going cheap - don't do 1 if you have the moneyz
      template:
        metadata:
          labels:
            app: my_app
          annotations:
            vault.hashicorp.com/agent-init-first: 'true'
            vault.hashicorp.com/agent-inject: 'true'
            vault.hashicorp.com/role: 'my-app-specific-vault-role'
            vault.hashicorp.com/agent-inject-secret-cloudflared_config.yml: 'secret/data/prod/my_app/cloudflared'
            # renders "token: <value>" from the tunnel_token key written by the Terraform above
            vault.hashicorp.com/agent-inject-template-cloudflared_config.yml: |
              {{- with secret "secret/data/prod/my_app/cloudflared" -}}
              token: {{ .Data.data.tunnel_token }}
              {{- end }}
            vault.hashicorp.com/ca-cert: "/run/secrets/kubernetes.io/serviceaccount/ca.crt"
        spec:
          containers:
            - name: my_app
              image: registry.example.com/my_org/my_app:tag_ideally_immutable_and_with_@sha256...
              ports:
                - containerPort: 4000
              volumeMounts:
                - name: tmp
                  mountPath: "/tmp"
              securityContext:
                allowPrivilegeEscalation: false
                readOnlyRootFilesystem: true
                runAsNonRoot: true
                runAsUser: 10001
                runAsGroup: 10001
            - name: cloudflared
              image: cloudflare/cloudflared:2023.3.1
              args: ["tunnel", "--config", "/vault/secrets/cloudflared_config.yml", "run"]
              volumeMounts:
                - name: tmp
                  mountPath: "/tmp"
              securityContext:
                allowPrivilegeEscalation: false
                readOnlyRootFilesystem: true
                runAsNonRoot: true
                runAsUser: 10001
                runAsGroup: 10001
          serviceAccountName: my_app # important: used to authenticate to vault
          volumes:
            - name: tmp
              emptyDir:
                medium: Memory

or this for an own-pod deployment:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: my_namespace-argo-ingress # placeholder: real k8s object names can't contain underscores
      namespace: my_namespace
    spec:
      selector:
        matchLabels:
          app: my_namespace # for me even in its own pod it's still _kinda_ part of the app in that namespace as a whole...
      replicas: 1
      template:
        metadata:
          labels:
            app: my_namespace
          annotations:
            vault.hashicorp.com/agent-init-first: 'true'
            vault.hashicorp.com/agent-inject: 'true'
            vault.hashicorp.com/role: 'my_namespace-app-specific-role'
            vault.hashicorp.com/agent-inject-secret-cloudflared_config.yml: 'secret/data/prod/my_namespace/cloudflared'
            # renders "token: <value>" from the tunnel_token key stored at that path
            vault.hashicorp.com/agent-inject-template-cloudflared_config.yml: |
              {{- with secret "secret/data/prod/my_namespace/cloudflared" -}}
              token: {{ .Data.data.tunnel_token }}
              {{- end }}
            vault.hashicorp.com/ca-cert: "/run/secrets/kubernetes.io/serviceaccount/ca.crt"
        spec:
          containers:
            - name: cloudflared
              image: cloudflare/cloudflared:2023.3.1
              args: ["tunnel", "--config", "/vault/secrets/cloudflared_config.yml", "run"]
              volumeMounts:
                - name: tmp
                  mountPath: "/tmp"
              securityContext:
                allowPrivilegeEscalation: false
                readOnlyRootFilesystem: true
                runAsNonRoot: true
                runAsUser: 10001
                runAsGroup: 10001
          serviceAccountName: my_namespace
          volumes:
            - name: tmp
              emptyDir:
                medium: Memory

And let’s not forget to allow egress, this example for the own-pod deployment:

    apiVersion: cilium.io/v2
    kind: CiliumNetworkPolicy
    metadata:
      name: my_namespace-network
      namespace: my_namespace
    spec:
      endpointSelector: {}
      ingress: # this bit is for some pod-to-pod communication
        - fromEndpoints:
            - matchLabels:
                app: my_namespace
          toPorts:
            - ports:
                - port: "80"
                - port: "8080"
      egress:
        - toEndpoints:
            - matchLabels:
                io.kubernetes.pod.namespace: kube-system
                k8s-app: kube-dns
          toPorts:
            - ports:
                - port: "53"
                  protocol: UDP
              rules:
                dns:
                  - matchPattern: "*"
        - toEndpoints:
            - matchLabels:
                app: my_namespace-frontend
            - matchLabels:
                app: my_namespace-backend
          toPorts:
            - ports:
                - port: "80"
                - port: "8080"
        - toEndpoints:
            - matchLabels:
                io.kubernetes.pod.namespace: vault
                app.kubernetes.io/name: vault
          toPorts:
            - ports:
                - port: "8200"
        - toFQDNs:
            - matchName: api.cloudflare.com
            - matchName: update.argotunnel.com
          toPorts:
            - ports:
                - port: "443"
                  protocol: TCP
        - toFQDNs:
            - matchName: region1.v2.argotunnel.com
            - matchName: region2.v2.argotunnel.com
          toPorts:
            - ports:
                - port: "7844"
                  # protocol: TCP + UDP

If you’re in-pod there’s no need for the ingress and the same-namespace egress bits. In my setup I don’t have anything else to do but if you’re e.g. on AWS you’ll likely need to add a couple egress rules to your EKS’s SecurityGroups as well.
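I haven't run this on AWS myself, but the security group side would presumably look something like the sketch below. It assumes the hashicorp/aws provider's aws_security_group_rule resource; the variable name is made up and the ports mirror the CiliumNetworkPolicy above. Security groups can't match on FQDNs, so the destination here is wide open; narrow it down if you have a list of ranges you trust.

```hcl
# Sketch only: "node_security_group_id" is a made-up variable name.
variable "node_security_group_id" {
  type        = string
  description = "Security group attached to the nodes running cloudflared"
}

# Tunnel connections on 7844/tcp
resource "aws_security_group_rule" "cloudflared_tunnel_tcp" {
  type              = "egress"
  security_group_id = var.node_security_group_id
  protocol          = "tcp"
  from_port         = 7844
  to_port           = 7844
  cidr_blocks       = ["0.0.0.0/0"]
  description       = "cloudflared tunnel connections (TCP)"
}

# Tunnel connections on 7844/udp
resource "aws_security_group_rule" "cloudflared_tunnel_udp" {
  type              = "egress"
  security_group_id = var.node_security_group_id
  protocol          = "udp"
  from_port         = 7844
  to_port           = 7844
  cidr_blocks       = ["0.0.0.0/0"]
  description       = "cloudflared tunnel connections (UDP)"
}

# HTTPS to api.cloudflare.com and update.argotunnel.com
resource "aws_security_group_rule" "cloudflared_https" {
  type              = "egress"
  security_group_id = var.node_security_group_id
  protocol          = "tcp"
  from_port         = 443
  to_port           = 443
  cidr_blocks       = ["0.0.0.0/0"]
  description       = "cloudflared API and update checks"
}
```

If your node security group already allows all egress you won't need any of this.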

Layout

I mentioned in-pod and own-pod deployments but of course there are other ways of making this work too. Your own-pod can be one per namespace, one for the whole cluster, or anything else in between, like a one-that-feeds-into-a-service-mesh kinda thing.

Which one should you choose? Frankly, I don’t know. Yet. I’ll reserve further exploration of layouts and their merits for a future post, but here are the couple things I can say so far:

- To route to a single-pod app, an in-pod container is probably the simplest: localhost networking, less network config, less yaml for deployment.

- To route to a multi-pod app (e.g. 2-pod backend/frontend routed path-based on / vs /api/v0/**), it’s easier to have an own-pod in the same namespace as the app.

Network tunnels

All of the above is about direct-to-app kinda tunnels - but you can also use them a bit like a site-to-site VPN, to give you access to an entire network. I didn’t play with that functionality at all and I’m not sure I’d recommend it as a starting point, as zero trust architectures are better if designed in terms of very specific, fine-grained connections to endpoints and apps rather than network zones.

But it’s there if you need it - I’m sure it can be handy for a gradual migration path from traditional VPN.

Scalability and reliability

All of your traffic now goes through a single client.

What’s the network cap? How often does it fail? Does it scale if I deploy more?

I don’t know. Again, yet. All I’ve done so far is a small setup for a small app in a small cluster, so a 1x instance is probably enough - but in a real environment the story might be entirely different. To date I have no expertise to contribute on this topic.

Where to go from here

If you’ve followed this, congrats! You’re now running your own Cloudflare tunnel and saving a handful of $$$ on loadbalancers.

From here, the logical next step is to look at Cloudflare Access to build some zero-trust goodness on top of your tunnel. Other ideas involve looking at a way to avoid having tunnel secrets in Terraform config, or looking at how to make the tunnel more scalable and reliable.

Have fun!

tags: Cloudflare - Security Engineering - k8s - Edge - CloudSec - Networking - Architecture