First steps with Semgrep rules (ft. GHA concurrency & Dependabot)

by Foo

It’s been on my radar for a long time, but finally I got to have a little poke at writing custom rules for Semgrep.

I maintain a learning setup with a kubernetes cluster and a handful of microservices that deploy on it. The repos and permissions are deliberately set up to emulate the presence of separate collaborating teams, like a delivery team distinct from a platform and security teams.

I’ve had the Semgrep app enabled on all application CI pipelines for a while, with default rulesets. The main goal of that experiment was to understand what’s the user experience from the point of view of a security team owning the tool, as well as what’s the feedback loop experience for engineers.

And that’s fine but not that interesting - after all the market is saturated with similar tools. But what really sold me on looking deeper into Semgrep is that it really makes the rule writing a first class citizen. Most tools focus on the “write-scan-fix” or “scan-triage-filter” feedback loops focused on engineering and vulnerability manager personas, but rule writing adds a new one: “respond-codify-control”, opening up to use cases such as incident responders acting on an incident post-mortem, threat hunters correlating suspicious logs with risky rules on security groups, engineering communities agreeing standards or, really, anyone wanting to write continuous improvement into code.

Anyway, enough with the sales pitch, here’s the story: I use GitHub Actions for my pipelines.

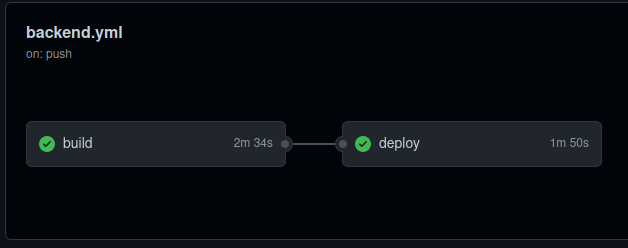

Picture some reasonably simple stuff for a microservice (let’s say Java/Spring Boot) which runs build and tests in a Docker container,

pushes it to a registry tagged with git sha, then updates a kube Deployment with the new tag. What can possibly go wrong?

Yep, this simple.

And like most simple things, it breaks in horridly subtle ways when you introduce concurrency.

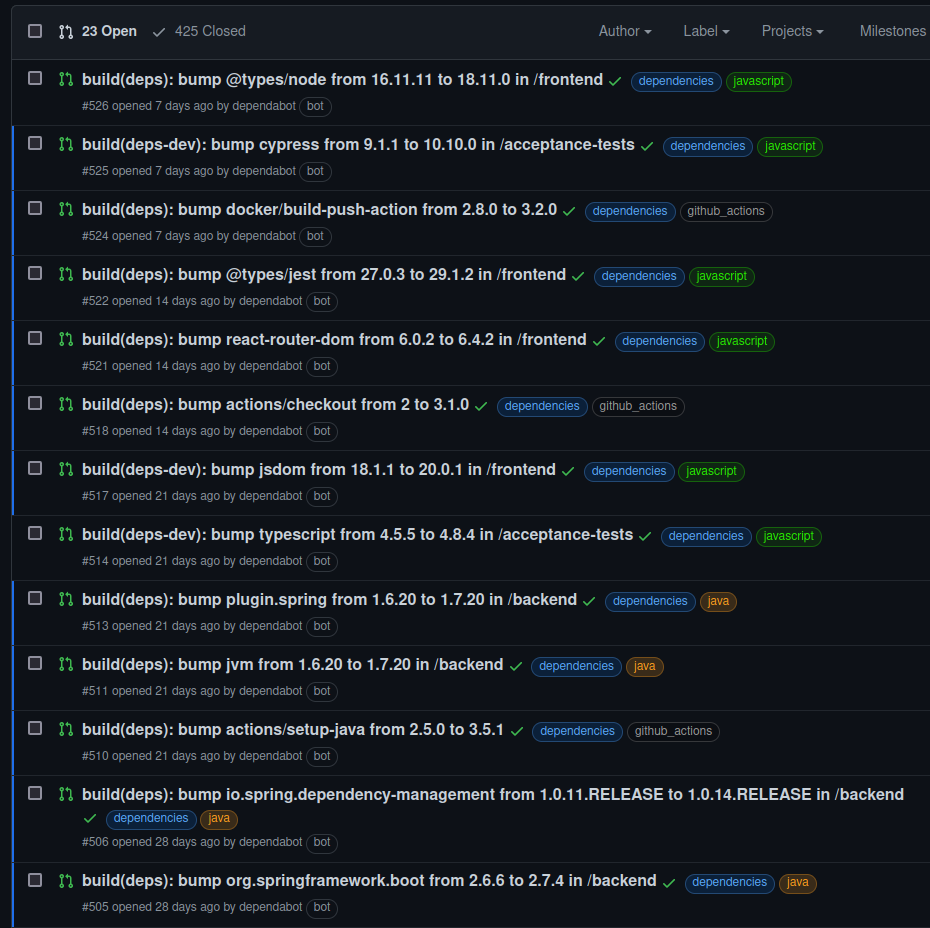

Little aside: you probably heard about Dependabot, the friendly robot thing that opens a million PRs/s on all your projects to help you stay

on top of updating your always sometimes vulnerable deps (when configured right! Do set a .github/dependabot.yml file!).

And you probably know that sometimes these PRs can pile up if you don’t look for a couple days.

Like this.

Now, GitHub also has a mobile app and here’s why I love it: I can merge all these Dependabot PRs from the loo.

Brilliant.

But how’s this related to build concurrency, you might ask? Well you see, when the delightful technological advancements of the modern age

accelerate your PR-merging-on-the-loo to such ludicrous speeds, what happens it that there’s a lot of on.push.branches: [main] events

firing off fairly close in time, each one triggering a workflow run concurrently.

With enough variation in execution time, coming from scheduling and load on worker nodes, what can happen is that the workflow from the

first merged PR ends up starting first but being the slowest, and executing the deploy command last.

At that point, from the perspective of the cluster, a Deployment with that build is effectively considered the most recent while, in fact,

it’s missing a bunch of commits.

Is this a big deal? In this scenario maybe not particularly - we’re speaking a bunch of Dependabot PRs, perhaps for minor releases. But consider this: sometimes this minor releases are made for critical security fixes. And even in different scenarios, what about the time wasted investigating the root cause for an incident, for hours looking at what should have been in production, only to realise that you’ve been inspecting the wrong changeset? I’d be livid!

Enough about the problem: whaddyagonnadoaboutit?

Simple: GHA has a feature called concurrency (who would have guessed!): essentially you tag a workflow or a job with an arbitrary string, and that string value acts as a kind of mutex: only one job tagged with the same value can execute at the same time. In the simplest incarnation, it translates to these two lines at the top level of a workflow file:

# .github/workflows/somethingsomething.yml

concurrency:

group: prod-deploys

or the single line version:

concurrency: prod-deploys

Now to be completely Frank, because Frank likes thinking about corner cases, I actually have no idea of what happens if you leave that string (or sub-object) blank, but for sure nothing good:

concurrency:

# or

concurrency: {}

# or

concurrency:

group:

so we’d rather avoid it.

Now, having a round of all workflow files we have today and and pasting these 2 lines if needed is a trivial, menial exercise.

But as GHA workflow files are the kind of self-authored thing that changes constantly and new ones can and will be added at any time, for

any new service/project/repo/weekend-pet-project-with-a-single-commit-then-never-again-looked-at, we’re not in the space of a clearly scoped

initiative with a beginning and an end: we’re in the space of continuous detection, prevention and remediation over time.

We need to be able to detect workflow files, now and in the future, which could incur in race conditions if parallelised,

check whether they configure (or misconfigure!) concurrency and flag them for remediation.

Enter Semgrep.

Semgrep is a SAST, running client-side, covering multiple programming languages low and high level including everyone’s favourite, YAML, featuring the ability to write custom rules as a first-class feature (rules are actually written in YAML, so joke’s on me here). You read the rest of the pitch on the fourth paragraph already (did you?), so to the point: we can use it to detect misconfigured concurrency.

I would have much liked to approach this by giving a skim to the docs for an overview, but I just came off a few weeks of reading Phoenix and Vault docs, so I kinda hard enough of reading and just launched myself into the live editor instead. The cool thing is: all registry rules are browsable from there, so you can have a learn-by-copying-from-existing kind of experience.

So first of all I found something in the yaml > github-actions section that sounded like what I was after, and started from there:

I think I picked semgrep-github-action-push-without-branches as a starting point.

Couple of things are evident from it:

- pervasiveness of usage of the

...operator to define structural matching - difference between

patterns:(AND) andpattern-either:(OR) - wtf is

pattern-inside:even

on this last one, looking around a couple other rules, turns out pattern-inside: is actually an important construct to grasp, and for that I had to refer to the docs.

Essentially, you use it with a relatively loose structural pattern in AND with a more specific one: together they end up meaning “match the specific patten, but only

when it’s in the context of the looser one”.

So let’s give it a try: first of all, I need to find out whether the workflow is prone to problems when parallelised or not. It’s for sure a low-fidelity proxy, but as a starting point we can check whether there’s a job called “deploy”. To translate this in rules, we also need to use the “metavariables” feature, which captures a block of code for further matching, in this case with a case-insensitive regex:

patterns:

- pattern: |

jobs:

...

$JOBNAME:

...

- metavariable-regex:

metavariable: $JOBNAME

regex: (?i)deploy

This sets the overall context for understanding whether the workflow file at large is interesting or not.

Now what we want to figure out is whether the file is lacking a concurrency option: the first guess would be to simply AND the condition:

patterns:

- pattern: |

jobs:

...

$JOBNAME:

...

- metavariable-regex:

metavariable: $JOBNAME

regex: (?i)deploy

- pattern-not: |

concurrency: $CONCURRENCY

But it simply doesn’t work, and I don’t really get why. Nor it does for the non-variable formulation concurrency: asd (with that specific value being the

literal same as the test case used), and neither does

concurrency:

...

Why this happens is beyond me. I suspect I don’t fully understand how the context work when ANDing patterns, but a quick skim in the docs didn’t get me an answer (in fairness, I might have skipped over a crucial paragraph without realising) and the following hour of attempts didn’t lead me to any solution, only more confusion: turns out you can’t express “not this nor that” nor “this or not that”. In rules, the following two are both invalid:

patterns:

- pattern-not: this

- pattern-not: that

# nor

patterns:

- pattern-either:

- pattern: this

- pattern-not: that

I’m sure that with enough applications of De Morgan’s law and friends you can do some clever stuff and flip the conditions around but… I was a bit annoyed about this, to be honest.

So what followed this discovery was a couple hours of relentless attempts to express “within a yaml with a job named ~deploy, either without concurrency or with one of the three forms of misconfigured concurrency”. I’ll spare you all the attempts also because, frankly, I can’t remember exactly what I tried and in which order. But in the end, I found it easier to split it in two rules: the first, detects where concurrency is not set and it should, the second, assuming that you did specify a concurrency: key, checks that the value you set is reasonable.

The latter looks like this:

pattern-either:

- pattern: |

concurrency:

- pattern: |

concurrency:

group:

...

Simple enough, right?

And the first one, extended to detect actions running terraform apply by looking at their run: commands:

patterns:

- pattern-either:

- patterns:

- pattern: |

jobs:

...

$JOBNAME:

...

- metavariable-regex:

metavariable: $JOBNAME

regex: (?i)deploy

- patterns:

- pattern-inside: "steps: [...]"

- pattern-inside: "run: ..."

- pattern: "run: $TF"

- metavariable-pattern:

metavariable: $TF

language: generic

pattern: terraform ... apply

- pattern-not-inside: |

...

concurrency: $CONCURRENCY

...

As you can see, beyond matching metavars by regex it’s also possible to process them with stuff like the ... operator itself.

Confused? Me too. There is some stuff that I tried at various points and though it should totally be a match and it wasn’t - and I don’t get why.

I suspect that a lot of that are specific to how YAML is processed and normalised before matching, and it wouldn’t be an issue if this was some other language.

To come up with such a long chain of pattern-inside, that still looks weird to me, I took inspiration from other rules from the library - the ability

to peek around and see what worked in other cases, pick it and try it against your test cases is very powerful. The feeling that I got from this is that

it’s much easier to express a rule that finds something rather than a rule that finds its absence.

With the rules saved in my editor and added to the Rules board, they now evaluate against my action and correctly detect the misconfigurations, yay!

Also, after logging in via the CLI on my local machine, I can run them individually specifying semgrep -c s/my-org-or-account-name:rule-name. Awesome!

Lastly, I tried to figure out whether I can have these rules specified as code rather than in the web editor, but I found no obvious way of doing so. It’s straightforward to replicate the same development process locally, simply executing the rule from file against a test case file, but to then upload it in your org registry you still have to to via the web editor. I suspect that the ability to sync rules are (or will be) part of the paid offering rather than the free tier, which I’m using now.

In conclusion:

- I like the tool

- I dislike YAML

- it’s easier to find stuff than to find not stuff

- there’s great potential to do a lot more than the silly stuff I presented here

and I hope you found even a syllable of my rambly story useful.

Have fun!

tags: Semgrep - Security Engineering - SAST - GitHub Actions - Dependabot - AppSec